By Julie Mandolini-Trummel, CLAME Specialist, and Alison Harrell, CLAME Specialist, Headlight Consulting Services, LLC

This blog post is the first in a three-part series on Developmental Evaluations (DEs). In Parts II and III of this series, we will explore how to determine whether a DE is a good fit for your project/activity and how DE Administrator responsibilities shift when a DE is locally led.

Introduction

Have you heard of Developmental Evaluation (DE) but been confused about exactly what it is and how it differs from traditional performance evaluations? Have you heard that it is an optimal approach for complex environments and is highly adaptive, but feel unclear about what this looks like in practice? Read on if you’ve answered “yes” to any of these questions!

In Part I of this series, we aim to demystify DEs and help practitioners feel less intimidated by this evaluation approach. Drawing on Headlight's experience carrying out several large-scale, multi-year DEs, this post offers a high-level overview of what a DE is, what it is not, and what it looks like in action, along with key resources if you are interested in learning more.

What is Developmental Evaluation?

DE is an evaluation approach that facilitates continuous, data-driven adaptations of interventions in complex environments. It differs from typical evaluations in a few ways:

- DEs have a Developmental Evaluator embedded¹ alongside the implementation team, while maintaining independent reporting and management lines for objectivity;

- DEs emphasize rapid data collection, analysis, and reflection for evidence-based decision-making and effective adaptation of strategy, operations, and activities in real-time;

- DEs are methodologically agnostic, meaning that methodologies and analytical techniques are chosen based on the learning and evaluation questions, which change throughout the project/activity's implementation to meet emerging information and evidence needs.

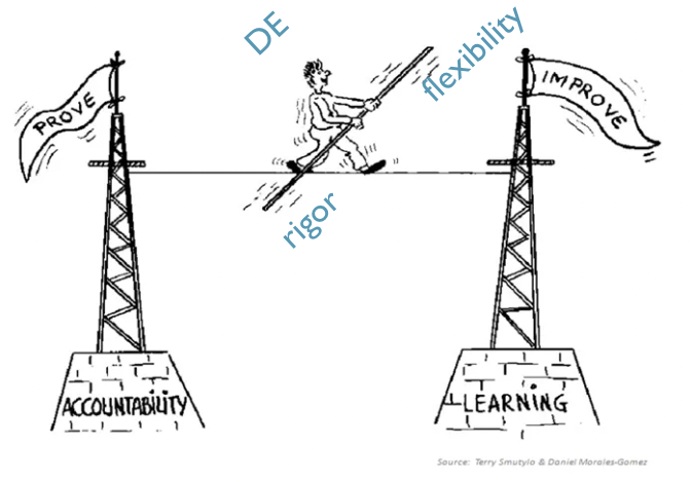

This learning-focused approach balances rigor and flexibility in methodological choices while constantly working toward project/activity improvement (see the image below).

What is a DE not?

Because a DE has many distinctive features, it is essential to be clear-eyed about what a DE is not. This helps ensure that DEs are applied appropriately and that all stakeholders understand the collaborative and iterative nature of this type of evaluation. We have found that directly comparing traditional evaluation approaches (i.e., performance evaluations and impact evaluations) with DEs is an effective way to broaden people's understanding.

| | Traditional Approaches | Developmental Evaluation |
|---|---|---|
| Purpose | Supports improvement, summative testing, and accountability | Supports the development of innovation and adaptation in dynamic environments |
| Standards | Fixed methodology; independence; credibility with external authorities | Methodological flexibility and adaptability; systems thinking; balanced creative and critical thinking; high tolerance for ambiguity; ability to facilitate rigorous, evidence-based perspectives |
| Options | Traditional research and disciplinary standards of quality dominate options | Utilization-focused: options are chosen in service of developmental use |
| Deliverables | Detailed formal reports | Rapid, real-time feedback in diverse, user-friendly forms |

As the table above shows (adapted from Gamble, J. A. A. (2008). A Developmental Evaluation Primer. J. W. McConnell Family Foundation), DEs are distinct from traditional evaluation approaches, but a DE's purpose and evaluation questions can be designed to meet the requirements of a performance evaluation. For USAID implementing organizations, this means that although a DE is not technically a performance evaluation, it is an approved performance evaluation approach under Automated Directives System (ADS) 201, and it aligns with implementing the 2018 Evidence Act, per OMB Memorandum M-20-12.

What Does Implementing a DE Look Like?

A DE has two main phases: Phase 1, Start-up and Acculturation, and Phase 2, Implementation. There is no prescribed length for a DE; it is driven by the needs and overall timeline of the project/activity. However, we recommend implementing a DE for at least 18 months to realize the value of its rapid feedback loops and adaptation cycles.

During the Start-up and Acculturation Phase (which generally runs 3 to 9 months, depending on complexity), the DE Team scopes the DE, onboards key staff (Developmental Evaluators and DE Administrators), and sets up administrative systems and processes. Although acculturation (i.e., orientation to and buy-in for the DE) begins in this first phase, the DE Team works continuously to build and maintain buy-in among internal and external DE stakeholders throughout implementation.

Phase 1: Start-up and Acculturation

| Illustrative Activity/Deliverable | Purpose |
|---|---|
| Review and synthesize existing evidence | To fully understand what works or has already been attempted in the space, so that the DE learning questions focus on unknowns and evidence gaps. Depending on needs, this could be a Learning Review, Desk Review, or Literature Review. |
| Identify DE stakeholders | To identify who will be involved in the DE, including early champions who can advocate for it and help build buy-in among other stakeholders. |
| Facilitate one or more acculturation workshops | To gather key stakeholders, introduce the concept of DE, describe how the DE will be implemented, clarify roles and collaboration, and co-design DE learning questions. |
| Prioritize DE learning questions | To rank the DE learning questions identified during the acculturation workshop(s) and to consolidate, refine, or synthesize them as needed before moving to evaluation design. |

In the Implementation Phase, the DE Team works with stakeholders to articulate and test their programmatic assumptions, carry out evaluative efforts, and present evidence in ways that help stakeholders move from understanding the evidence to taking adaptive action. Throughout the project/activity, the DE Team continues to cultivate buy-in among DE stakeholders and remains embedded in the project/activity that is participating in the DE.

Phase 2: Implementation

| Illustrative Activity/Deliverable | Purpose |
|---|---|
| Continuously collect, code, and analyze data on the DE learning questions | To answer the DE learning questions and inform upcoming decisions (e.g., about activity design choices or resource allocations) or emergent needs. |
| Create and share use-focused analyses and products | To present high-quality, accessible evidence that answers evaluation questions, shows what works in achieving project/activity outcomes, and facilitates stakeholders' uptake of best practices. |
| Organize and facilitate actionable strategic learning debriefs and support adaptive actions | To synthesize and share lessons learned from the evaluative efforts, build action plans based on the recommendations, and support and track the adaptations that result from the DE's evaluative and learning efforts. |

Although wrap-up and closeout form a distinct phase of the DE, implementing the DE with a focus on sustainability, local ownership, embeddedness, and capacity building allows the DE Team to hand over responsibilities to DE stakeholders more seamlessly when the DE is complete.

Closing

DEs are a useful tool in your evaluation toolbox when working on a project/activity with a high level of complexity. Compared with traditional evaluations, DEs have many distinct characteristics, such as embeddedness and an emphasis on iterative learning. The DE approach supports timely feedback, moves evidence to action through collaboration with DE stakeholders, and enables continuous, evidence-based adaptation. We hope this post helps you better understand what DEs are, what they are not, and what a DE looks like in action. In Part II of this series, we will explain how to determine whether a DE is a good fit for your project/activity. Stay tuned!

If you want to learn more, explore these DE resources gathered by the Headlight team:

Key Resources for Implementing Developmental Evaluations

- Developmental Evaluation (an overview of the method with FAQs)

- Developmental Evaluation Primer (overview of the method; debunks common myths about DEs on pages 20-24)

- DE Principles (core principles of the method)

- Implementing Developmental Evaluation: A Practical Guide for Evaluators and Administrators (step-by-step implementation guidance with tools and templates)

- DE Theory to Practice Article (lessons from three DE pilots)

- Developmental Evaluation Exemplars: Principles in Practice (compilation of DE exemplars)

Examples of Developmental Evaluations

- Sustained Uptake DE: a Developmental Evaluation conducted by the Developmental Evaluation Pilot Activity (DEPA-MERL) for the U.S. Global Development Lab to evaluate the uptake of innovations and scaling pathways at the Lab.

- USAID/Ethiopia Strengthening Disaster Risk Management Systems and Institutions (SDRM-SI) DE: conducted by Headlight Consulting Services, LLC, this Developmental Evaluation is working with the USAID/Ethiopia SDRM-SI Project to support the adaptation and refinement of its approach to strengthening the capacity of Ethiopia's communities and institutions to manage disaster risks effectively.

- DE to Support Knowledge Translation: this paper reflects on the use of DE to implement a large-scale knowledge translation research project in the field of Indigenous primary health care.

Questions? Please email us at info@headlightconsultingservices.com

Footnote

- A Developmental Evaluator or evaluation team can also be embedded remotely. Embeddedness is about integration into the day-to-day operations of a team/activity. Sitting at a desk next to the implementation team can help, but remote embedding has also been done successfully.
