By: Rebecca Herrington

As researchers and evaluators work to monitor, evaluate, and learn from developments in the field, we often collect qualitative data from beneficiaries and stakeholders via observational interactions and documentation, focus groups, key informant interviews, and more. Many are hesitant to trust findings and recommendations from qualitative data, and they critique the qualitative methods used for not being rigorous enough. It is essential that MEL practitioners, and the field more broadly, are intentional and honest about the levels of confidence and the strength of the findings and conclusions being drawn, to ensure appropriate use of evidence and a clear understanding of what we do and do not know. Qualitative methods are not by default less rigorous. They can hold up to high standards of confidence and substantiate findings that contribute meaningfully to evidence. Most of the misconceptions about qualitative methods lacking sufficient rigor to serve as evidence stem from instances (and there are many) of poor application. But there are various ways to ensure the data collection and analysis processes used within qualitative methods are rigorous, meet international best practice standards, and achieve acceptable minimums for sampling and triangulation before putting forward findings and recommendations.

Headlight would like to provide recommendations for evaluators at all levels to strengthen their qualitative work to improve the use of qualitative standards in our field and improve data-driven decision making in international development writ large. We have compiled a 5-part blog series on qualitative rigor tackling everything from integration of rigor at each phase of evaluative efforts to conducting qualitative analysis to writing Findings, Conclusions, Recommendations matrices. This is the first post in the series tackling essential truths of qualitative rigor and how to ensure qualitative rigor in the design phase of an evaluative effort.

Essential Truths of Qualitative Rigor

Given the long history of misconceptions and poor use of qualitative methods, we would like to start the conversation about qualitative rigor by providing four essential truths:

- Qualitative approaches are not just for complex or hard-to-measure efforts. Qualitative data provides explanations of how or why efforts are working, not working, or how things are being influenced, which is applicable learning for all sectors and levels of programming.

- Qualitative approaches are not inherently less rigorous than quantitative approaches; it is all in the application. Qualitative approaches are beholden to sampling saturation rates, comparative sampling, triangulation of findings, and other standards of rigor, just like quantitative approaches.

- Qualitative approaches differ for MEL work and programmatic results, and the two should not be mixed when focusing on rigor. There are many qualitative approaches, such as Sensemaking, that are highly beneficial to participants and can be used programmatically to great effect when done in an ethical and contextually-sensitive manner (e.g., having trained, local psychologists on board to protect against and help with processing trauma). These approaches can also be used for learning purposes, but rarely meet the level of rigor necessary to contribute to evidence building. Make clear distinctions between programming and MEL when choosing methods and be earnest about the level of rigor that is possible and needed for each.

- Qualitative approaches require technical expertise to implement correctly. Conducting interviews or focus groups is not a qualitative approach; these are ways of collecting qualitative data. Qualitative rigor depends on selecting a method (e.g., Process Tracing, Outcome Harvesting, Most Significant Change) that is best fit to answer the identified evaluation questions, and then ensuring the evaluation team has the technical expertise and capacity to implement the chosen method according to existing standards.

Qualitative Rigor in the Design Phase

There is a multitude of issues with how we currently design evaluations and MEL efforts, qualitative or not. Evaluations are sometimes left as an afterthought, not appropriately resourced to answer questions provided by the donor, or designed by one technical expert but implemented by another team without any handover. Amidst these issues, we can still improve qualitative rigor, and it must start at the design phase. We can improve qualitative rigor through design by:

- Ensuring we are intentionally selecting the most appropriate method to provide use-focused answers to the evaluation questions, even if that means we need to consult a methodologist who has more comprehensive knowledge of method variations and their relative pros and cons;

- Acknowledging that “mixed methods” is not an evaluation design, but rather a data collection approach, and that selection of an actual evaluation method will provide needed structure, processes, and guidance for successful accountability, learning, and evidence building;

- Choosing fit-for-purpose sampling methods that acknowledge limitations, with sample sizes determined by sampling saturation rate research (see below); and,

- Designing thorough inception reports, which review existing documentation to appropriately identify evidence gaps and more accurately direct primary data collection efforts.

Sampling Methods

A key component of qualitative rigor is sampling. A sampling method is the process of identifying from whom you will collect data. There are quite a few different methods to determine your sample, and just like the overarching evaluative method, it is important that your chosen sampling method is appropriate for the evaluation questions that have been identified, the type(s) of information you need, and any limitations you might face in data collection. The R&E Search for Evidence blog provided an excellent overview of how to pick the right sampling methods in their 2017 post, “A pathway for sampling success.”

Sampling Saturation Rates

Beyond the sampling method, it is also crucial to ensure you achieve sampling saturation rates. Saturation, in this instance, refers to the point when incoming data produces little to no new information (Guest et al., 2006; Guest and MacQueen, 2008). There is a wide range of existing research on this topic, and most sources agree that at least 6 interviews of a homogeneous group (as defined by the evaluative effort sampling structure) will cover 70% or more of the findings that will emerge from further data collection (Guest et al., 2006). According to Guest et al., 12 interviews will increase that coverage to 92%. I cannot tell you the number of evaluation scopes I have seen with an arbitrarily chosen number of interviews or focus groups, or with a sample size determined only by resource or time limitations. Qualitative rigor requires representative data from the various stakeholders, and ensuring this takes intentional, reflective decision making around sampling saturation. As a base assumption, Headlight recommends a minimum of 6 interviews per homogeneous group based on the research.

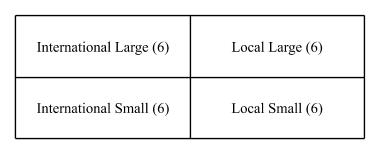

For example, if you were looking at the operational performance of international, local, large, and small NGOs in Kenya, you would have four homogeneous groups and would need a minimum of 24 interviews (6 per group) to have sufficient confidence of 70% coverage of possible findings, and more if you wanted regional representation in the data. Determining your sample size based on sampling saturation rates is a quick win to add more rigor to your MEL work!
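The arithmetic behind the Kenya example can be written out as a short Python sketch. This is only an illustration, not a Headlight tool: the function name `minimum_interviews` and the lookup table are hypothetical, and the coverage figures are simply the ones cited above from Guest et al. (2006). It also assumes each listed NGO type is treated as its own homogeneous group.

```python
# Coverage figures as cited in this post from Guest et al. (2006):
# interviews per homogeneous group -> approximate share of findings covered.
COVERAGE_BY_INTERVIEWS = {6: 0.70, 12: 0.92}


def minimum_interviews(groups, per_group=6):
    """Return the minimum total number of interviews.

    `groups` is a list of homogeneous group labels (hypothetical helper);
    duplicate labels are counted once.
    """
    return len(set(groups)) * per_group


# Assumption: each NGO type in the example is one homogeneous group.
ngo_groups = ["international", "local", "large", "small"]

total = minimum_interviews(ngo_groups)
print(f"{total} interviews for roughly "
      f"{COVERAGE_BY_INTERVIEWS[6]:.0%} coverage per group")
```

Raising `per_group` to 12 doubles the total to 48 interviews in exchange for the higher coverage figure, which makes the resource trade-off explicit when scoping an evaluation.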

Coming Up Next

Headlight specializes in qualitative methods and rigor, so if you’d like help on your next qualitative evaluative effort, training for staff on qualitative approaches, or associated technical assistance, reach out to us at <info@headlightconsultingservices.com>. As mentioned, this is the first blog of a 5-part series on qualitative rigor, so tune back in on August 3rd for our next blog covering tips for ensuring qualitative rigor throughout implementation, coding, and analysis of evaluative efforts!
